The shifting power dynamics of news on the Web

Monday, December 11, 2017

Andrew Betts

The way news is propagated on the web is in a constant state of flux, and here's the current breakdown of power.

Over the last several years, control of news on the web has drastically shifted. Social networks and search are increasingly how we find content, and our old loyalties to our favorite publications are giving way to consumption of content from varied and ever-changing sources.

Large, respectable publishers are still vital to a healthy news industry and indeed a healthy democracy, and they remain the source of much quality journalism, but their role as destination sites has been diminished. This happened partly because of aggressive online advertising techniques, including third-party scripts and embedded content that hurt the user experience. Publishers need to reassert their control and reorient their strategy around the needs of the user. The good news is that many are doing so, building high-quality, lightweight progressive web apps, and new web technologies are emerging to help.

Developers working in the publishing industry can employ these techniques to ensure a positive experience for readers while still supporting the ads that some publishers depend on. This is important, because for the web to thrive, we need to encourage decentralised ownership; the web is not just Facebook, and nor should it be.

New technology can help

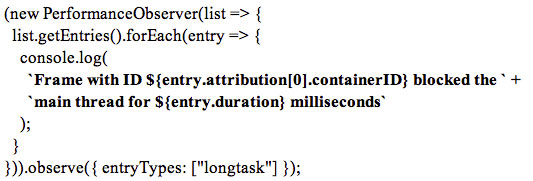

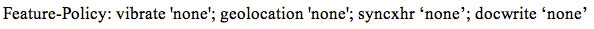

Take the Long Task API. Site owners who are building fast sites, but are hamstrung by poorly performing third parties, can now understand when third-party code is behaving badly:

Long task is in Chrome Canary right now (Google’s developer preview version of Chrome), and still being standardised.
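
As a rough sketch of how a site might use this, the snippet below totals long-task time per offending frame and registers a `PerformanceObserver` for `longtask` entries where the browser reports support for them. The helper names (`totalsByFrame`, `watchLongTasks`) and the hand-rolled interfaces are illustrative assumptions rather than part of the API, and since the spec is still being standardised the details may change.

```typescript
// Minimal structural types so the sketch does not depend on DOM typings.
interface TaskAttribution {
  containerType: string; // e.g. "iframe"
  containerSrc: string;  // src of the frame that hosted the task, if known
}

interface LongTaskEntry {
  duration: number; // milliseconds; long tasks run for 50 ms or more
  attribution: TaskAttribution[];
}

// Hypothetical helper: sum long-task time per frame so slow third parties stand out.
function totalsByFrame(entries: LongTaskEntry[]): Map<string, number> {
  const totals = new Map<string, number>();
  for (const entry of entries) {
    const src = entry.attribution[0]?.containerSrc || "(unattributed)";
    totals.set(src, (totals.get(src) ?? 0) + entry.duration);
  }
  return totals;
}

// Hypothetical helper: start observing only where "longtask" is a supported entry type.
// Returns false when the environment cannot report long tasks.
function watchLongTasks(report: (totals: Map<string, number>) => void): boolean {
  const PO = (globalThis as any).PerformanceObserver;
  if (!PO || !(PO.supportedEntryTypes ?? []).includes("longtask")) return false;
  new PO((list: { getEntries(): LongTaskEntry[] }) => {
    report(totalsByFrame(list.getEntries()));
  }).observe({ entryTypes: ["longtask"] });
  return true;
}
```

A page could call `watchLongTasks` once at startup and send the totals to its own analytics endpoint, which is left out here because it is site-specific.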

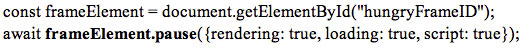

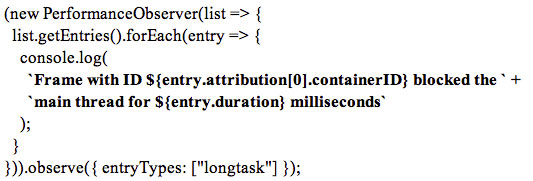

Using the PauseDocument API, poorly performing frames can have their event loop paused, then resumed later if the user tries to interact with them. Or large interactive elements, such as embedded infographics, can be paused when the page is loaded and activated only when the user interacts:

Like Long Task, PauseFrame hasn’t shipped yet. When it does, you can look forward to smarter controls enabling you to focus browser resources on things that matter most.

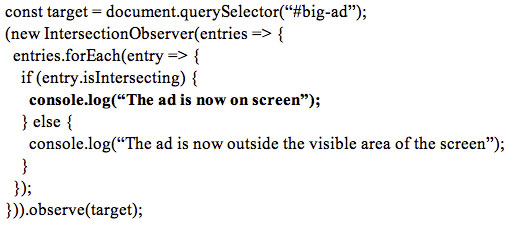

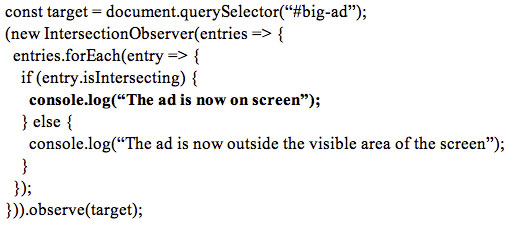

With IntersectionObserver, sites now have an efficient way to determine what is on screen and what is not currently visible, which is another great input into deciding whether to pause a frame:

Best of all, IntersectionObserver is available already in at least Chrome and Firefox, with a good quality polyfill (get it from polyfill.io) for the others, so you can use this today.
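
To make that concrete, here is a minimal sketch that reports when each frame enters or leaves the viewport. `trackVisibility` is a hypothetical helper, and the observer constructor is passed in as a parameter (defaulting to the browser's `IntersectionObserver`) purely so the example can run outside a browser; what a site does with the visibility signal is up to it.

```typescript
// Structural types keep the sketch independent of DOM typings.
interface VisibilityEntry<T> {
  target: T;
  isIntersecting: boolean;
}
type VisibilityCallback<T> = (entries: VisibilityEntry<T>[]) => void;
interface ObserverLike<T> {
  observe(target: T): void;
}
type ObserverCtor<T> = new (
  callback: VisibilityCallback<T>,
  options?: { threshold?: number },
) => ObserverLike<T>;

// Hypothetical helper: call onChange(frame, visible) whenever a frame
// crosses the edge of the viewport in either direction.
function trackVisibility<T>(
  frames: T[],
  onChange: (frame: T, visible: boolean) => void,
  Observer: ObserverCtor<T> = (globalThis as any).IntersectionObserver,
): void {
  const observer = new Observer(
    (entries) => {
      for (const entry of entries) onChange(entry.target, entry.isIntersecting);
    },
    { threshold: 0 }, // fire as soon as any part of the frame is visible
  );
  for (const frame of frames) observer.observe(frame);
}
```

In a browser this might be called as `trackVisibility(Array.from(document.querySelectorAll("iframe")), (frame, visible) => { /* decide whether to pause */ })`.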

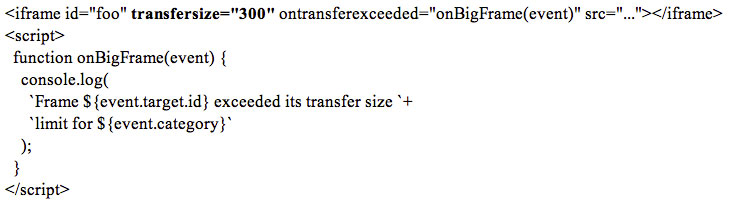

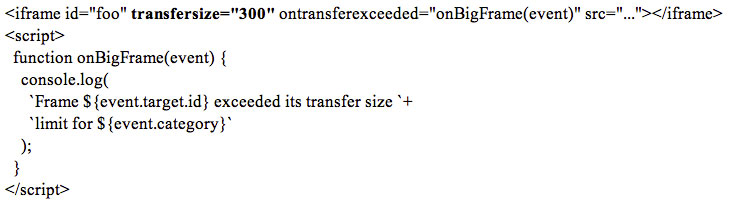

And how about setting a hard limit on the resources a third party can consume in a frame? Enter the Transfer Size policy, which can be used to limit an iframe to, say, 300KB of data use:

The Transfer Size policy has been kicking around for a long time, but security concerns have so far kept it from being implemented. In theory, knowing that a frame has hit the limit could give a site more information about the framed page than it should have. For instance, the Facebook homepage is much larger if you are logged in than if you are not, so the limit could be used to infer a visitor's login state. Still, with some safeguards, this API should also be coming to a browser near you very soon.

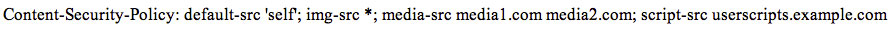

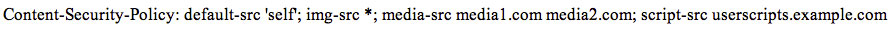

In addition to APIs being added to JavaScript and the DOM, there are efforts at the HTTP level to help enforce better behaviour. For several years now we’ve had Content Security Policy, allowing sites to limit which hostnames are allowed to serve resources into the page (and subframes):

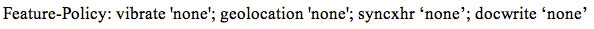
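
For illustration, a policy of this kind can be assembled from a map of directives and sent in the `Content-Security-Policy` response header. In the sketch below, `buildCsp` is a hypothetical helper and the hostnames are placeholders; only the directive names and the header itself come from CSP.

```typescript
// Hypothetical helper: turn a directive map into a Content-Security-Policy value.
function buildCsp(directives: Record<string, string[]>): string {
  return Object.entries(directives)
    .map(([name, sources]) => [name, ...sources].join(" "))
    .join("; ");
}

// Placeholder hostnames, chosen only for the example.
const policy = buildCsp({
  "default-src": ["'self'"],                            // everything else: same origin only
  "script-src": ["'self'", "https://cdn.example.com"],  // scripts from one extra host
  "frame-src": ["https://ads.example.net"],             // only this host may be framed
});

// The server would then send:  Content-Security-Policy: <policy>
console.log(policy);
```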

CSP is now being augmented with several other policy-like headers. Referrer Policy allows sites to control whether the URL of a page is passed on to the next site in the Referer header, closing potential privacy-compromising data leaks. Origin Policy is an active proposal that would allow site-wide rules to be collected in a single document. And my favourite, Feature Policy, allows sites to selectively disable features of the web that they don’t intend to use or allow subpages to use.

Initially, Feature Policy will regulate access to ‘powerful’ features that require user consent to activate (like vibrate and geolocation), allowing sites to prohibit third-party code from triggering user-consent prompts. The more exciting uses will come when we add the ability to use Feature Policy to turn off legacy and badly designed features of the web, such as synchronous XMLHttpRequest or document.write.
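
As a small sketch of how these headers might be set together, the function below builds a `Referrer-Policy` and a `Feature-Policy` value for a page that wants no referrer leakage and no geolocation or vibration prompts from any frame. `policyHeaders` is a hypothetical helper, and the syntax follows the drafts current at the time of writing; Feature Policy has since been renamed Permissions Policy with a different header syntax, so treat this as illustrative only.

```typescript
// Hypothetical helper: build policy headers that disable the given features
// everywhere ('none') and suppress the Referer header on outgoing requests.
function policyHeaders(disabledFeatures: string[]): Record<string, string> {
  return {
    "Referrer-Policy": "no-referrer",
    "Feature-Policy": disabledFeatures
      .map((feature) => `${feature} 'none'`)
      .join("; "),
  };
}

// Feature names as used in the draft spec at the time.
const headers = policyHeaders(["geolocation", "vibrate"]);
console.log(headers["Feature-Policy"]);
```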

Together, these standards efforts shift power in a meaningful way back towards content owners, who are able to more easily build and maintain fast, secure, and engaging web content, reduce the incentive for users to prefer aggregation platforms, and enforce better standards on third parties.

Corporate and public policy

Platform owners are also waking up to the need to cultivate and support content producers, to ensure the health and future of their ecosystems. Google recently relaxed its rules on so-called “first click free,” allowing publishers who charge for content to do so without having to create easy methods of circumventing the paywall. Facebook has also made changes to support paid content, and rowed back on plans to keep all content within its platform.

However, more needs to be done to measure and assess the quality and accuracy of content. Search engines like Google became successful by recognising that popularity is a good measure of quality. That’s true to a point, but the recent challenges with fake news show that popularity can sometimes be a poor proxy for accuracy. In the wake of the Las Vegas mass shooting in October, Google was promoting pages from 4chan, a notoriously offensive bulletin board, among its relevant ‘top stories’. Twitter is awash with fake content, a large amount of which is actually produced by ‘verified’ accounts (so much so that they recently suspended new verifications while they figure out what verification is actually for). Facebook recently acknowledged that “malicious actors” on its platform influenced the 2016 U.S. presidential election.

Initial attempts to fix these issues include specifications for “fact checking” microdata formats, such as schema.org’s ClaimReview, which is now supported in Google search. Facebook also has said it will “sacrifice profits” for better security (some might argue that this is hardly a sacrifice but simply the need to afford users and democratic society a basic level of respect).

Small steps

Provenance and trust are hard things to get right. Who has authority to make that determination? And who watches the watchers? In a connected global community of billions, where information (or disinformation) can reach a huge audience at almost zero cost, the power structures and dynamics of content on the web are something that can ultimately affect all of us in a profound way.

As developers, we have the power to effect change here. Even small feature changes to the web platform can act to shift power, change incentives, and eventually change the way we consume content online.

This content is made possible by a guest author, or sponsor; it is not written by and does not necessarily reflect the views of App Developer Magazine's editorial staff.

