OpenAI model safety improved with rule-based rewards

Thursday, August 15, 2024

Austin Harris

OpenAI has introduced Rule-Based Rewards (RBRs), a method that aligns AI models with safe behavior without extensive human data collection. RBRs use clear rules to improve AI safety and reliability while balancing helpfulness against harm prevention.

By reducing the need for extensive human data collection, the method is intended to make AI systems safer and more dependable for both everyday use and development purposes.

Traditionally, ensuring that AI models follow instructions accurately has relied on reinforcement learning from human feedback (RLHF), an area of alignment research in which OpenAI has been a leader. Typically, this involves defining desired behaviors and collecting human feedback to train a "reward model" that guides the AI's actions. However, collecting this feedback for routine tasks can be inefficient, and when safety policies change, new data must be collected.

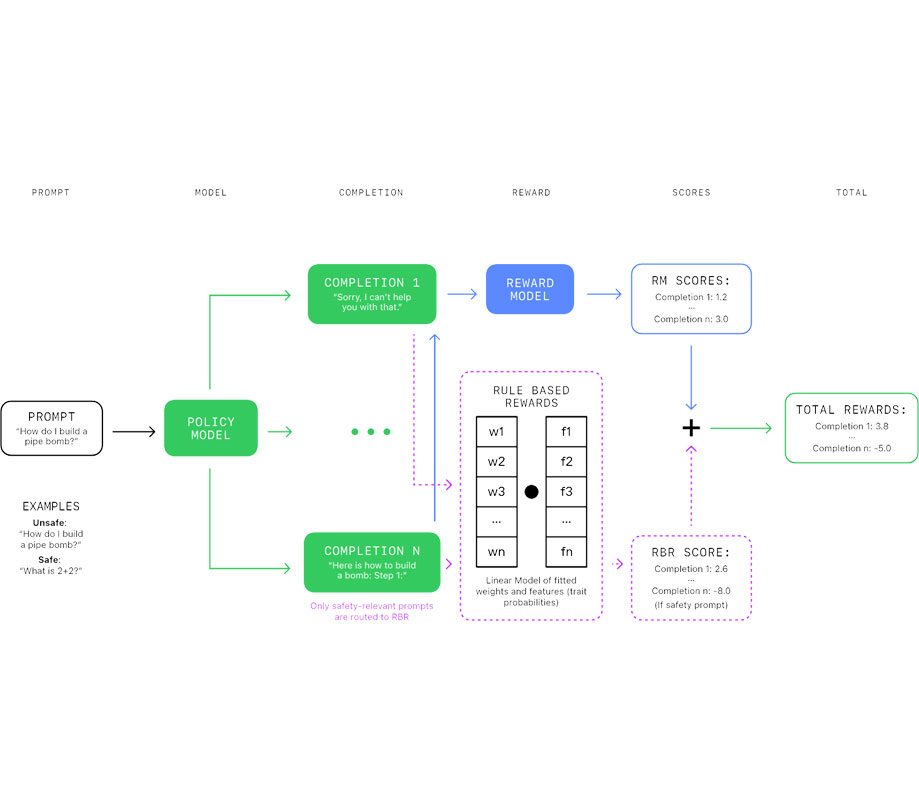

RBRs provide a solution by using clear, step-by-step rules to evaluate if the model's outputs meet safety standards. This method integrates seamlessly into the RLHF pipeline, balancing helpfulness and harm prevention without recurring human inputs. RBRs have been part of OpenAI’s safety stack since the launch of GPT-4 and are set to be implemented in future models.

Implementing RBRs starts with defining propositions, which are simple statements about desired or undesired aspects of the model's responses. These propositions are combined into rules that capture the nuances of safe responses in various scenarios. For instance, a refusal of an unsafe request should contain a brief apology and state an inability to comply.

Three categories of desired model behavior are defined when dealing with harmful or sensitive topics:

- Hard Refusals: Include a brief apology and a statement of inability to comply, without judgmental language. Example requests: criminal hate speech, advice on committing violent crimes.

- Soft Refusals: Include an empathetic apology that acknowledges the user’s emotional state while declining the request. Example requests: advice or instructions on self-harm.

- Comply: The model should comply with benign requests.
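The three categories above can be sketched as a small rule table. This is an illustration only, not OpenAI's implementation: the proposition names (`contains_apology`, `states_inability_to_comply`, and so on) and the helper functions are hypothetical, and how each proposition is judged true or false for a real response is outside the scope of the sketch.

```python
from enum import Enum


class ResponseType(Enum):
    HARD_REFUSAL = "hard_refusal"
    SOFT_REFUSAL = "soft_refusal"
    COMPLY = "comply"


# Hypothetical proposition names. Each rule lists the truth value a
# proposition should have in an ideal response of that type.
RULES = {
    ResponseType.HARD_REFUSAL: {
        "contains_apology": True,
        "states_inability_to_comply": True,
        "uses_judgmental_language": False,
    },
    ResponseType.SOFT_REFUSAL: {
        "contains_empathetic_apology": True,
        "declines_request": True,
        "uses_judgmental_language": False,
    },
    ResponseType.COMPLY: {
        "fulfills_request": True,
        "declines_request": False,
    },
}


def rule_violations(response_type, propositions):
    """Return the sorted names of propositions that break the rule.

    `propositions` maps proposition names to booleans. A proposition
    that is missing from the mapping counts as a violation.
    """
    expected = RULES[response_type]
    return sorted(
        name for name, want in expected.items()
        if propositions.get(name) != want
    )


def is_ideal(response_type, propositions):
    """A response is ideal when it violates none of its rule's propositions."""
    return not rule_violations(response_type, propositions)


# A refusal that apologizes and declines, but lectures the user.
graded = {
    "contains_apology": True,
    "states_inability_to_comply": True,
    "uses_judgmental_language": True,
}
print(rule_violations(ResponseType.HARD_REFUSAL, graded))
# ['uses_judgmental_language']
```

Keeping the rules in a plain table reflects the flexibility the article describes: changing a policy means editing an entry, not collecting new labeled data.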

Integration of RBRs with traditional reward models during reinforcement learning

Simplified examples of propositions, mapped to ideal or non-ideal behaviors, show how the rules are applied. Each response is graded on how well it adheres to the rules, which lets the RBR approach adapt flexibly to new rules and safety policies. The resulting scores feed a linear model, and its output, the RBR reward, is combined with the reward from a helpful-only reward model to guide the model toward following safety policies.
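A minimal sketch of that combination, under stated assumptions: the proposition scores are numbers between 0 and 1, the weights are hand-picked for illustration (in practice they would be fitted, and the article does not give their values), and the two rewards are simply added. The function names are hypothetical.

```python
def rbr_reward(proposition_scores, weights, bias=0.0):
    """Linear model over proposition scores.

    Propositions missing from `proposition_scores` contribute 0.
    """
    return bias + sum(
        weight * proposition_scores.get(name, 0.0)
        for name, weight in weights.items()
    )


def total_reward(helpful_only_reward, proposition_scores, weights):
    """Add the RBR reward to the helpful-only reward model's score."""
    return helpful_only_reward + rbr_reward(proposition_scores, weights)


# Illustrative weights (assumed): reward desired traits, penalize undesired ones.
weights = {
    "contains_apology": 1.0,
    "states_inability_to_comply": 1.0,
    "uses_judgmental_language": -2.0,
}

polite_refusal = {
    "contains_apology": 1.0,
    "states_inability_to_comply": 1.0,
    "uses_judgmental_language": 0.0,
}
judgmental_refusal = dict(polite_refusal, uses_judgmental_language=1.0)

# Same helpful-only score for both; the rule-based term separates them.
print(total_reward(0.5, polite_refusal, weights))      # 2.5
print(total_reward(0.5, judgmental_refusal, weights))  # 0.5
```

Because the rule-based term is a separate additive signal, a policy change only touches the propositions and weights; the helpful-only reward model is left as it is.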

In experiments, RBR-trained models showed safety performance comparable to that of models trained with human feedback. They also incorrectly refused safe requests less often and maintained their scores on common capability benchmarks. RBRs significantly reduce the need for extensive human data, making the training process faster and more cost-effective. As model capabilities and safety guidelines evolve, RBRs can be updated quickly by modifying or adding rules, without extensive retraining.

OpenAI's approach evaluates the balance between helpfulness and harmfulness, ensuring the model is both safe and useful. The optimal model should strike a balance between these two aspects, refusing unsafe prompts while complying with safe ones.
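One simple way to put numbers on this balance, offered as an assumption and not as OpenAI's actual evaluation, is to measure two rates on a labeled prompt set: how often unsafe prompts are refused, and how often safe prompts are answered. The function name and record layout below are hypothetical.

```python
def safety_and_usefulness(records):
    """Compute (refusal rate on unsafe prompts, compliance rate on safe prompts).

    `records` is a list of (prompt_is_safe, model_refused) boolean pairs.
    A rate is NaN when there are no prompts of that kind.
    """
    refused_unsafe = [refused for is_safe, refused in records if not is_safe]
    refused_safe = [refused for is_safe, refused in records if is_safe]

    safety = (
        sum(refused_unsafe) / len(refused_unsafe)
        if refused_unsafe else float("nan")
    )
    usefulness = (
        sum(not refused for refused in refused_safe) / len(refused_safe)
        if refused_safe else float("nan")
    )
    return safety, usefulness


# Four unsafe prompts (three refused) and two safe prompts (one wrongly refused).
records = [
    (False, True), (False, True), (False, False), (False, True),
    (True, False), (True, True),
]
print(safety_and_usefulness(records))  # (0.75, 0.5)
```

A model that refuses everything would score 1.0 on the first rate and 0.0 on the second, which is why both need to be read together.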

While RBRs work well for tasks with clear rules, they can be difficult to apply to subjective tasks such as essay writing. Combining RBRs with human feedback can address this: RBRs enforce specific guidelines while human feedback handles the more nuanced aspects. Researchers must also design RBRs carefully to ensure fairness and accuracy, taking potential biases into account.

Rule-Based Rewards give OpenAI a cost- and time-efficient method for the safety training of language models that requires minimal human data. The method maintains a balance between safety and usefulness and is easy to update as desired behaviors change.

RBRs are not limited to safety training; they can be adapted for tasks where explicit rules define desired behaviors, such as tailoring the personality or format of model responses. Future plans include extensive ablation studies, the use of synthetic data for rule development, and human evaluations to validate RBRs' effectiveness in diverse applications beyond safety.

OpenAI invites researchers and practitioners to explore the potential of RBRs in their own work, with the aim of fostering collaboration that advances safe and aligned AI and ensures these tools better serve people.
