NVIDIA releases GPU accelerator to improve AI

Friday, March 10, 2017 | Richard Harris

NVIDIA has released GPU accelerator blueprints to drive AI, in conjunction with Microsoft's Project Olympus.

As innovation progresses, more and more processing is being offloaded to the cloud to do the heavy lifting. But how much cloud usage is too much for cloud providers to handle efficiently? That is a question many companies hope never to have to answer as they ramp up their cloud usage exponentially. That’s where NVIDIA and Microsoft look to make big changes in the way cloud computing operates.

Microsoft's newest project, code-named Project Olympus, is generating buzz in the Silicon Valley community as it aims to address the computing stumbling blocks that stand in the way of many companies’ growth. In a Microsoft blog post, Kushagra Vaid, general manager and engineer at Azure Hardware Infrastructure, said that the project was made to improve “cloud services and computing power needed for advanced and emerging cloud workloads such as big data analytics, machine learning, and Artificial Intelligence (AI).”

Project Olympus is the next generation of cloud hardware and a “new model for open source hardware development.” Built upon a solid foundation of an open source hardware development model, the project has “created a vibrant industry ecosystem for datacenter deployments across the globe in both cloud and enterprise.”

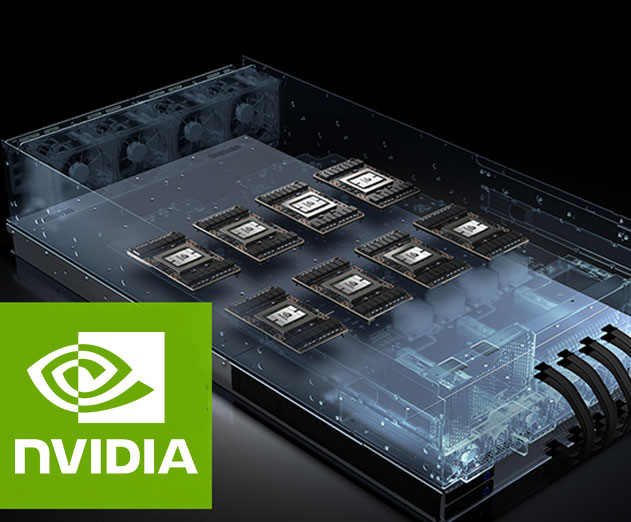

In conjunction with Project Olympus, NVIDIA has unveiled blueprints for a new hyperscale GPU accelerator to drive AI cloud computing. The project's hyperscale GPU accelerator chassis for AI, known as HGX-1, is designed to support eight of the latest “Pascal” generation NVIDIA GPUs and NVIDIA’s NVLink high-speed multi-GPU interconnect technology, and provides high-bandwidth interconnectivity for up to 32 GPUs by connecting four HGX-1 units together.

In a press release, NVIDIA said that “HGX-1 does for cloud-based AI workloads what ATX - Advanced Technology eXtended - did for PC motherboards when it was introduced more than two decades ago. It establishes an industry standard that can be rapidly and efficiently embraced to help meet surging market demand.”

The company went on to say that “Cloud workloads are more diverse and complex than ever. AI training, inferencing and HPC workloads run optimally on different system configurations, with a CPU attached to a varying number of GPUs. The highly modular design of the HGX-1 allows for optimal performance no matter the workload. It provides up to 100x faster deep learning performance compared with legacy CPU-based servers, and is estimated at one-fifth the cost for conducting AI training and one-tenth the cost for AI inferencing.”

It is important to note that this is the first Open Compute Project (OCP) server design to offer a broad choice of microprocessor options fully compliant with the Universal Motherboard specification, allowing it to address virtually any type of cloud computing workload.

