Insider’s View of a Real-World Project and the Role of User Modeling in Performance Test Development

Friday, March 27, 2015

Terri Calderone

Developing a composite application can be a complex and convoluted process, but when a company needs to test that system, the task is even more daunting. When development and testing teams operate with a mindset that favors siloed processes, the challenge increases.

I was recently involved with a project where a resort management firm was attempting to migrate its antiquated, siloed reservation and fulfillment performance testing methodology to full composite application testing.

Adapting a testing methodology to fit a modern composite architecture involves many of the same procedures commonly found within traditional performance testing. However, due to the increased complexity and test data requirements for script and scenario development, achieving success requires a concerted and sometimes customized effort. We used several of the mechanisms common to performance test development for this project while enhancing them as needed to produce a better final outcome.

User modeling was one of the methods pivotal to the success of the project. Performance testers developed and simulated models of user workloads, including user- and role-specific profiles, customized test scenarios, and realistic test data beds, to help them build performance tests representative of production. For the purposes of this article, we will focus on the user modeling and simulation components of the project.

Project Background

The reservation and fulfillment system represented an amalgamation of multiple applications, including multiple booking client GUIs, a centralized reservation system, and a homegrown bus/messenger. The system had become increasingly complex and unwieldy as the application development teams devised individualized solutions to various requests over time. These solutions were often created without sufficient consideration for how their work would impact the rest of the system.

Due to the fractured nature of the effort, the original testing team of five was finding it impossible to keep up with the changes. There was no composite suite of tests that the testing team could execute to see a complete picture of overall system performance prior to each release. As a result of this siloed approach, problems in production were common and difficult to replicate in the test environments.

Over time, the system had grown to more than 15 user interfaces and 30 middleware systems. It served both external and internal users and had a quarterly release cycle. Releases involved a huge number of changes across the production system. The test model used to support each release was still extremely fragmented, and the performance team was eager to move to a single solution for assessing the impact of changes to the system.

Tackling the Transition

Needless to say, unifying the individual test scenarios and scripts into an integrated, highly functional composite test architecture wasn’t an easy project. The new test architecture would allow for greater flexibility and produce more reliable results, but it would also require the test script owners to rethink script standards and development methods. Furthermore, it would create complexity in testing requirements, data management and process organization.

A quick look at scripting and execution offers ample evidence of the complexity in this regard. The testing team had used transaction naming consistently across all test scenarios. This approach worked well in single applications with fewer than 10 scripts and an average of 10 transactions per script.

With the composite architecture involving 15 applications (with the same number of scripts and transactions), the number of transaction timers could scale to as many as 1,500, making test execution difficult and performance evaluation and reporting next to impossible. To alleviate the overload of information, three to five Key Performance Indicators (KPIs) were chosen by the organization to represent application performance. This allowed the development and test teams to focus on areas of the application that were important to the customer and its users.
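The arithmetic behind that figure can be sketched in a few lines. The counts of 15 applications, 10 scripts, and 10 transactions come from the text above; treating the three to five KPIs as a per-application figure is an assumption made only for illustration, and all variable names are hypothetical.

```python
# Counts taken from the article.
applications = 15
scripts_per_application = 10
transactions_per_script = 10

# Every transaction in every script carries its own timer.
total_timers = applications * scripts_per_application * transactions_per_script

# Assumption: the "three to five KPIs" apply per application.
kpis_per_application_max = 5
kpi_timers_max = applications * kpis_per_application_max

print(f"Transaction timers in a composite test: {total_timers}")
print(f"KPI timers to report on (at most): {kpi_timers_max}")
```

Under that assumption, reporting shrinks from 1,500 timers to at most 75, which is the kind of reduction that makes evaluation of a composite test manageable.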

User Load Modeling

As mentioned earlier, user modeling was only one component of the project. There were other integrated pieces of the puzzle, such as a robust KPI Performance Scorecard and a homegrown test data management system, also playing important roles. However, user load modeling and simulation was a vital exercise that made composite architecture testing feasible. The user load models created a clear overview of the application for both project teams and business users and helped define the frequency of system events. They also could be mapped directly into the new performance test scripts and scenarios.

In orchestrating the user modeling and simulation exercise, Orasi and the company team members had to take into consideration the unique challenges presented by the separate, concurrent development paths that each application’s test process had taken. With 15 separate scenarios and 10 scripts per scenario, running a composite-level test was a mammoth undertaking that would be hard for a team of any size to orchestrate, let alone a team of five.

Script updates would take weeks, and with a weekly build schedule it would be impossible to keep up. In many cases, duplicate code meant that a single update had to be repeated everywhere the code appeared, whether within one script or across several applications.

Consequently, the core goals determined by Orasi and the performance team for the new test architecture were to reduce script maintenance, develop more effective performance tests, and improve team communication. The team was confident the changes would also satisfy the production-side goals of increased application throughput and a reduced incidence of outages.

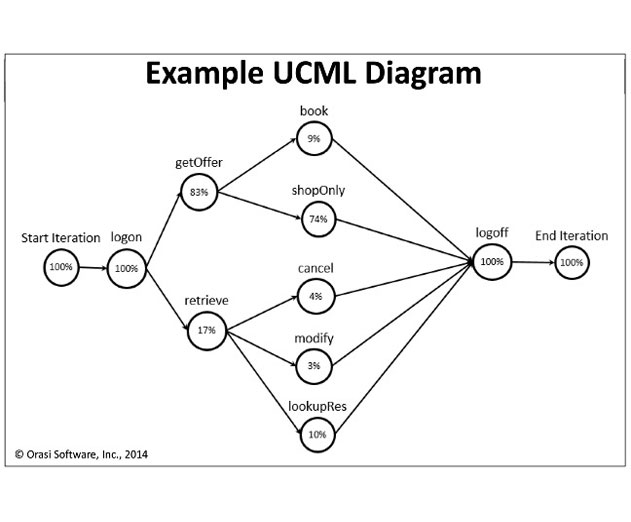

Working with the customer’s development teams, Orasi devised a UCML (User Community Modeling Language) diagram for each application. To accomplish this goal, each tester mapped the previously identified KPIs to common actions they had extracted from production logs gathered from the busiest month. That data was analyzed to determine the busiest hour and used to build the application simulation models.
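As a rough illustration of the log analysis described above, the sketch below finds the busiest hour in a set of log records and computes the mix of user actions within that hour. The record layout (an ISO timestamp paired with an action name), the sample data, and the function names are all hypothetical; the project's actual log format and tooling are not described in this article.

```python
from collections import Counter
from datetime import datetime


def to_hour(timestamp):
    """Truncate an ISO-8601 timestamp string to the start of its hour."""
    return datetime.fromisoformat(timestamp).replace(minute=0, second=0, microsecond=0)


def busiest_hour(records):
    """Return the hour (as a datetime) containing the most logged actions."""
    counts = Counter(to_hour(ts) for ts, _ in records)
    return counts.most_common(1)[0][0]


def action_mix(records, hour):
    """Return each action's share of all actions logged during the given hour."""
    actions = Counter(action for ts, action in records if to_hour(ts) == hour)
    total = sum(actions.values())
    return {name: count / total for name, count in actions.items()}


# Hypothetical sample records: (timestamp, user action).
records = [
    ("2015-01-03T09:15:00", "search_reservation"),
    ("2015-01-03T14:05:00", "search_reservation"),
    ("2015-01-03T14:20:00", "book_reservation"),
    ("2015-01-03T14:45:00", "search_reservation"),
    ("2015-01-03T14:50:00", "search_reservation"),
    ("2015-01-04T14:10:00", "search_reservation"),
]

peak = busiest_hour(records)
mix = action_mix(records, peak)
print(peak, mix)
```

The resulting action shares are the kind of frequencies a user model needs: they say how often each action should occur when the peak hour is replayed in a test.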

The UCML diagrams included not only KPI actions but also non-KPI user actions, such as a reservation search that did not result in a booking. Once the user actions had been mapped to the transactions, the actions could be mapped to the script code, putting in place the final bit of information needed to create a UCML diagram for each application. The team then reviewed and confirmed each UCML diagram with business analysts and stakeholders and proceeded to develop a single, modularized script that emulated the entire application workflow.
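One way to picture a single, modularized script driven by a user model is sketched below. It is not the team's actual script, which would have been written in a load testing tool: the action names, the weights, and the `run_virtual_user` function are hypothetical. The idea shown is that each user action lives in exactly one function, so an update is made once, and the model's frequencies decide which action a virtual user performs next.

```python
import random


# Each user action is defined once and shared by every scenario.
def search_reservation(session):
    session.append("search_reservation")


def book_reservation(session):
    session.append("book_reservation")


def cancel_reservation(session):
    session.append("cancel_reservation")


ACTIONS = {
    "search_reservation": search_reservation,
    "book_reservation": book_reservation,
    "cancel_reservation": cancel_reservation,
}

# Hypothetical action frequencies, as a user model might specify them.
USER_MODEL = {
    "search_reservation": 0.6,
    "book_reservation": 0.3,
    "cancel_reservation": 0.1,
}


def run_virtual_user(model, iterations, rng):
    """Run one virtual user, choosing each action according to the model's weights."""
    names = list(model)
    weights = [model[name] for name in names]
    session = []
    for _ in range(iterations):
        name = rng.choices(names, weights=weights, k=1)[0]
        ACTIONS[name](session)
    return session


session = run_virtual_user(USER_MODEL, iterations=20, rng=random.Random(42))
print(session)
```

Because the weights live in one table, changing the workload after a review with business analysts means editing the model rather than the script code.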

Final Outcome

With such a clearly defined view of the entire system and how the system and its individual components interacted, testers were in a much better position to develop meaningful performance tests for individual applications and processes. Because they were being developed from a unified perspective, the tests would also ensure each individual development effort was moving toward a serviceable whole.

One positive outcome of developing and executing performance tests more efficiently was an increase in test coverage across the organization. The testing team increased coverage by including additional applications that had not been tested previously. In the first year after the transition, the team was able to test 10 additional non-composite applications as one-offs during their composite release cycles.

All goals set out by Orasi and the company were met. After every release, the developing company conducts product incident analyses to see what, if anything, was missed and take appropriate action. Overall, the composite application for reservations and fulfillment has experienced significant performance improvement, greater release stability and increased coverage with far fewer outages.

Read more: http://www.orasi.com/Pages/default.aspx

This content is made possible by a guest author, or sponsor; it is not written by and does not necessarily reflect the views of App Developer Magazine's editorial staff.
