
The Intersection of Big Data Analytics and Software Development: Why You Should Be There

Tuesday, September 27, 2016 | Don Vilsack

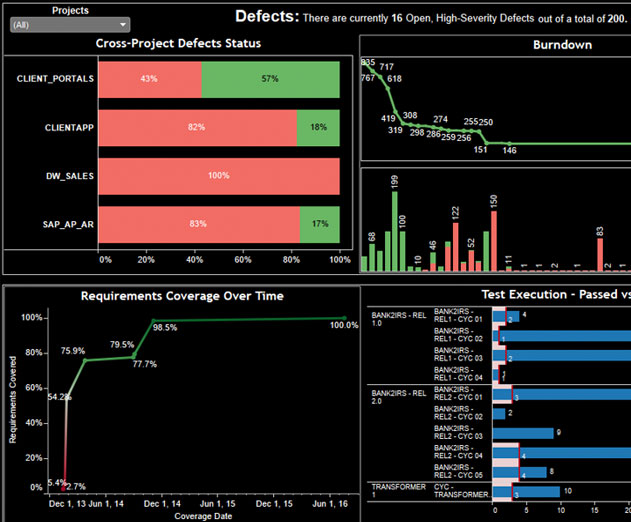

Despite the proven value of collecting raw software project data and analyzing it to create actionable, easily digested key performance indicators (KPIs), many firms still struggle to extract, analyze, and organize this data into reports—let alone dashboards or scorecards. If this sounds like your organization, don’t be surprised. In my experience, only a handful of firms have been able to implement truly effective, streamlined reporting systems—and many teams and their leaders don’t even recognize what they are missing.

In some cases, team members assume that because their various reports provide interesting information, they have gone far enough. Others are so bogged down with time-consuming processes such as manual data collection and report generation that they cannot envision anything beyond the next report. Something as basic as creating an end-of-day testing report for management continues to be a tedious and time-consuming manual task.

This hurts productivity across the board. Not only are testers bogged down with generating manual reports, but in many cases, the reports aren’t comprehensive enough to provide meaningful decision-making information. This can cause reverberations across the enterprise.

For example, if reports aren’t sufficiently insightful, then management might not realize defect rates are rising. No one takes action to pinpoint and correct the problem, and defects are discovered too late for developers to fix them before the release goes into production. User frustration ensues, and no one is happy.

Although producing accurate, accessible information such as defect reports won’t guarantee software quality, quality is nearly impossible to achieve without it. To improve the situation, numerous companies have developed, or incorporated into existing platforms, functionality that tracks and organizes (at a basic level) data related to software team activities.

However, the functionality to automatically extract this raw data, analyze it, and convert it into actionable metrics and KPIs has remained elusive.

Now, teams frustrated with the tedium and complexity of reporting are finding help from an unexpected quarter: technologies developed to harness "big data," the colossal, complex datasets that traditional data-processing applications cannot effectively process.

Big Data Versus Software Data: Where Is the Connection?

Processing datasets of more than five petabytes (one definition of big data)—especially those incorporating unstructured data from myriad sources—requires incredibly fast processors, paired with sophisticated analytics software to identify the patterns and associations that provide meaningful feedback. Also, organizations must have a mechanism to visualize this information.

Over the past decade, a cadre of innovative enterprises have developed the technologies to accomplish these goals and wrapped them into analytics platforms that are already revolutionizing fact-based decision making in other areas. So, what does this have to do with reporting for software activities?

Leadership in progressive, quality-focused organizations recognized that the challenges of harnessing and visualizing big data were a close parallel to the hurdles experienced by software teams. Although software project activity data might appear to be a world away from big data, it shares a few notable similarities with unstructured big data. It is not easily accessible in its native form, it is not properly organized for data analysis and reporting, and it is often unstructured as well.

In other words, the obstacles previously preventing big data from being useful are similar to those keeping software teams mired in inadequate, time-consuming, manual reporting. As such, the requirements for developing a functional, automated analytics and visualization/reporting solution for software project data are similar, as well.

Envisioning a Better System

If only organizations could harness these technologies, they could drive near-real-time analysis and reporting to support informed decision making during the software development and testing process. This information would be especially valuable for continuous integration and agile efforts, as well as for DevOps teams having a hard time achieving integrated communication and collaboration—a major stumbling block in adopting this approach.

However, as with big data analytics, it wouldn’t be enough to analyze one data stream from one tester or even one team. Similarly, the results wouldn’t be reliable or conclusive if team members were to cherry-pick the metrics that were easy to pull. To provide full value, the solution would need to automatically extract all available data from tools like HPE Application Lifecycle Management or JIRA Software (preferably both), as well as from any other tools being used.

The consolidated data extraction would ensure sufficient information to cover everything: teams, projects, and other activities. The solution would then normalize the data, removing inconsistencies between values, fields, and naming conventions, prior to analytics and visualization. The resulting KPIs would be both broad and deep, ranging from the number of tests that passed, failed, or were blocked in a day to defect distribution by severity, status, or root cause, defect trends, and beyond.
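To make the normalization and KPI steps concrete, here is a minimal Python sketch. It is not how OARS or any particular platform works. The record layout, the field names (`date`, `status`, `severity`), the tool labels, and the `STATUS_ALIASES` mapping are all assumptions made up for illustration. The sketch takes records that two hypothetical tools label differently, maps them to one shared vocabulary, and then computes two of the KPIs mentioned above: daily test outcomes and defect distribution by severity.

```python
from collections import Counter, defaultdict

# Hypothetical mapping from tool-specific status labels to one shared vocabulary.
STATUS_ALIASES = {
    "passed": "passed", "pass": "passed", "ok": "passed",
    "failed": "failed", "fail": "failed",
    "blocked": "blocked", "on hold": "blocked",
}


def normalize_status(raw):
    """Map a raw status label to the shared vocabulary, or 'unknown'."""
    return STATUS_ALIASES.get(raw.strip().lower(), "unknown")


def daily_test_counts(test_runs):
    """Count normalized test outcomes for each day."""
    counts = defaultdict(Counter)
    for run in test_runs:
        counts[run["date"]][normalize_status(run["status"])] += 1
    return counts


def defects_by_severity(defects):
    """Count defects per severity, ignoring case and stray whitespace."""
    return Counter(d["severity"].strip().lower() for d in defects)


# Made-up sample records standing in for data extracted from two tools.
test_runs = [
    {"source": "tool_a", "date": "2016-09-26", "status": "Passed"},
    {"source": "tool_b", "date": "2016-09-26", "status": "FAIL"},
    {"source": "tool_b", "date": "2016-09-26", "status": "pass "},
    {"source": "tool_a", "date": "2016-09-27", "status": "Blocked"},
]
defects = [{"severity": "High"}, {"severity": "high"}, {"severity": "Low"}]

counts = daily_test_counts(test_runs)
severity = defects_by_severity(defects)

for day in sorted(counts):
    print(day, dict(counts[day]))
print(dict(severity))
```

Labels that are not in the mapping fall into an `unknown` bucket rather than being dropped, so gaps in the normalization rules stay visible in the resulting numbers.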

At the visualization end, users would be able to consume the data in a variety of formats (reports, dashboards, and scorecards, for instance) and create their own views. Furthermore, users would be able to see high-level results or drill down into the most detailed specifics.

Out of Conjecture and into the Real World

Today, the evolution described above is becoming reality. A handful of enterprises are working at varying levels to help software teams better analyze and visualize their test activity data using big data technologies.

Here at Orasi, we have transformed an earlier edition of our automated reporting tool, Orasi Advanced Reporting Solution (OARS), to meet the goals mentioned above, and more. For the automation and normalization functions, we worked with Alteryx, a leader in self-service data analytics. For the visualization features, we partnered with Tableau Software, a global leader in business analytics software.

Our consultants have already witnessed the benefits that come with implementing such a solution—where automated, fully analyzed, and organized project activity data is made easily and comprehensively consumable, on demand. These benefits include but are not limited to:

- Developers and testers can identify workload peaks and troughs that might cause problems—and track completion statuses across the entire software lifecycle.

- Project managers can spot problems earlier and reallocate resources as needed to maintain quality. They can also monitor trends to stop problems in their tracks.

- Executives can track project health and gain visibility across the entire project portfolio.

It’s an exciting time, especially for quality-focused organizations such as ours, and we look forward to seeing how these technologies will allow software project (and quality) management to evolve.

New Horizons for Software Delivery and Value

Processing and visualizing software project data in near-real time has incredible potential to facilitate decisions that expedite release cycles and boost quality. However, it isn’t the only way big data can make its way into software development and testing—and even quality assurance. A powerful synergy is building that will enable software teams to act more quickly and proactively upon a wide variety of data, including the big data that drove the technological transformation we described earlier.

Here is just one example. Data monitoring of unstructured data feeds such as social media has already become commonplace among customer satisfaction teams. If a key influencer with millions of followers—a celebrity, for instance—pronounces he or she doesn’t like a product, the effect will be felt at a retailer’s cash registers within days, if not sooner. Near-real-time big data analytics are enabling companies around the globe to learn of and address user dissatisfaction issues right away rather than weeks or months later.

It shouldn’t be long before this type of information makes its way into software user experience efforts—because it must. If a power celebrity such as Taylor Swift tells four million fans that she doesn’t like a new music app because it lacks a certain feature, it will do the organization little good to integrate that feature a year later. The app will be dying or dead by then.

It’s entirely possible that “user activity” monitoring tools could soon be integrated with production monitoring platforms, enabling firms to receive near-real-time information, not only when users are experiencing functionality problems with their apps but also when they are complaining to their friends about them on Facebook.

Such functionality will be a final piece in the quality puzzle, because savvy organizations will already have used the type of analytics we described earlier to better manage their software processes, minimizing defects and watching user satisfaction climb.


This content is made possible by a guest author, or sponsor; it is not written by and does not necessarily reflect the views of App Developer Magazine's editorial staff.
