How StreamSets Simplifies Setting Up New Ingest Pipelines

Friday, March 4, 2016


Stuart Parkerson

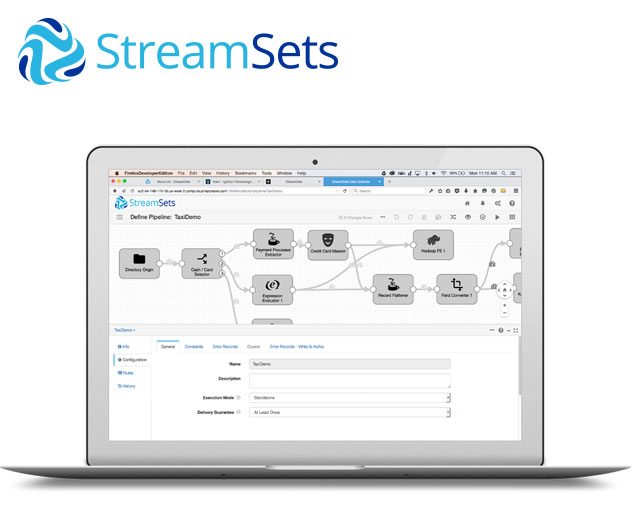

We recently visited with Girish Pancha, CEO of StreamSets, to talk about how the company’s open source software is used to build and operate reliable ingest pipelines. StreamSets’ Data Collector is a low-latency ingest infrastructure tool for creating continuous data ingest pipelines through a drag-and-drop UI within an integrated development environment (IDE).

ADM: How is StreamSets different from other data services and solutions available in the same space?

Pancha: For developers, StreamSets greatly simplifies setting up new ingest pipelines. The service makes it very simple to plug in a new component, test it, and then place it into production without having to write custom code. It runs on organizations’ existing clusters and operates 100 percent in memory, so it scales in lockstep with the rest of a customer’s infrastructure.

For operators, StreamSets provides complete control over, and visibility into, customers’ Big Data pipelines. The StreamSets Data Collector gives visibility to data in motion by assessing the quality of both the data flow and the data itself at each step of the ingest pipeline, testing data for anomalous conditions, alerting on these conditions and re-routing for automated cleansing.

Other offerings in this space cannot inspect the data in real time, which means they cannot provide early warning or automated handling, and they have limited value in helping organizations detect data accuracy problems and get to their root cause. StreamSets’ solution can provide an early warning when data structure or schema starts to drift. Data drift often means that the data reaching downstream analysis is incorrect.

Ultimately, the StreamSets Data Collector can detect data drift, whereas other solutions cannot.

ADM: What is data drift and how is it impacting organizations?

Pancha: Data drift is defined as the unpredictable, unannounced and unending mutation of data schema and semantics caused by the constant changes in systems generating the data at the source. It’s a natural consequence of an increasing reliance on “other people’s systems” for data generation. These systems are optimized locally upstream, and that upstream optimization affects downstream data integrity. The following data integrity issues often arise:

- Data loss: The data no longer fits what organizations expect.

- Data corrosion: The meaning has changed but downstream systems are not aware of the semantic drift.
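To make the structural side of data drift concrete, here is a minimal Python sketch that compares one incoming record against an expected baseline schema and reports missing fields, new fields, and changed value types. It is an illustration under stated assumptions, not StreamSets’ implementation or API: the names `detect_drift` and `DriftReport`, and the example field names, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class DriftReport:
    """Differences between one incoming record and the expected (baseline) schema."""
    missing: list[str] = field(default_factory=list)  # expected fields absent from the record
    added: list[str] = field(default_factory=list)    # fields the baseline does not know about
    type_changed: dict[str, tuple[str, str]] = field(default_factory=dict)  # name -> (expected, actual)

    def has_drift(self) -> bool:
        return bool(self.missing or self.added or self.type_changed)


def detect_drift(record: dict[str, Any], expected: dict[str, type]) -> DriftReport:
    """Hypothetical check: compare a record's field names and value types with a baseline."""
    report = DriftReport()
    for name, expected_type in expected.items():
        if name not in record:
            report.missing.append(name)
        elif not isinstance(record[name], expected_type):
            report.type_changed[name] = (expected_type.__name__, type(record[name]).__name__)
    # Any field present in the record but not in the baseline is new upstream structure.
    report.added = sorted(set(record) - set(expected))
    return report
```

A check like this only catches structural drift, the data loss case above. Semantic drift, such as a field that keeps its name and type but changes its units or meaning, passes it unchanged, which is why data corrosion is the harder problem to spot.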

When organizations experience data drift, it affects them in multiple ways. They pass bad data to consuming apps, which then generate incorrect insights. When incorrect insights arise, organizations’ trust in the whole data operation can start to erode. Data engineers and scientists spend too much time finding and fixing these data issues (“janitorial” or “fire-fighting” tasks), which adds cost and makes those employees less available to meet new business requirements.

ADM: How does StreamSets solve this issue of data drift?

Pancha: As StreamSets watches the data flows, it can inspect the actual data records, detecting anomalies as data drifts from historical patterns. Then the StreamSets user can decide how to address the drift, perhaps by transforming the data in-stream so it arrives consumable despite the drift, or adjusting downstream applications to the new reality.
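The remediation step described here, transforming data in-stream so it arrives consumable despite the drift, can be sketched as follows. This minimal Python example assumes the user has already chosen repair rules after drift was flagged: a renamed field is mapped back to its expected name, a field whose type changed is coerced, and records that still cannot be repaired are routed to an error list for cleansing. The rule tables `RENAMES`, `COERCIONS` and `REQUIRED` and the function `remediate` are hypothetical and are not part of StreamSets Data Collector.

```python
from typing import Any, Callable, Iterable

# Hypothetical repair rules a user might choose after drift is flagged.
RENAMES = {"country_code": "country"}                          # upstream renamed a field
COERCIONS: dict[str, Callable[[Any], Any]] = {"user_id": int}  # upstream changed a type
REQUIRED = ("user_id", "amount", "country")                    # fields downstream apps need

Record = dict[str, Any]


def remediate(records: Iterable[Record]) -> tuple[list[Record], list[Record]]:
    """Repair drifted records in-stream; route unrepairable records to an error list."""
    clean: list[Record] = []
    errors: list[Record] = []
    for record in records:
        # Map drifted field names back to the names downstream consumers expect.
        fixed = {RENAMES.get(k, k): v for k, v in record.items()}
        try:
            for name, convert in COERCIONS.items():
                if name in fixed:
                    fixed[name] = convert(fixed[name])
        except (TypeError, ValueError):
            errors.append(record)  # coercion failed: keep the original for later cleansing
            continue
        if all(name in fixed for name in REQUIRED):
            clean.append(fixed)
        else:
            errors.append(record)  # still missing required fields after repair
    return clean, errors
```

Keeping the rules in plain lookup tables means a newly observed drift can be handled by adding an entry rather than rewriting pipeline logic, and sending failures to a separate list mirrors the re-routing for automated cleansing mentioned earlier.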

ADM: Who can benefit from using StreamSets and what are the advantages?

Pancha: StreamSets can be thought of as general-purpose ingest infrastructure. Any enterprise that ingests data, whether streaming or batch, can benefit from StreamSets’ solution. Data engineers get a visual UI to connect data sources to destinations and apply a variety of built-in transformations to sanitize the data while it is in motion. Customers can also script custom transforms. StreamSets is resistant to data drift because, unlike traditional ETL tools, it doesn’t rely on a schema; instead, it uses a standard record format that enables complete visibility into the data flow and automated handling of drift conditions.

Ultimately, customers make their data engineers more productive by reducing hand coding; they get an ingest system that reliably delivers clean, ready-to-use data; and they gain the ability to monitor data flow in real time, including early warning and the ability to diagnose each step in the pipeline when trouble occurs.

ADM: What projects or use cases do organizations use StreamSets for?

Pancha: StreamSets provides the highest value where customers have numerous evolving source endpoints delivering data that must arrive in a high-quality state for immediate analysis. Organizations that adopt StreamSets value its ability both to simplify pipeline development and to provide visibility into and control over the operation of those pipelines.

As an example, Lithium uses the StreamSets Data Collector with its messaging fabric to enable near-real-time data flow. StreamSets is a binding agent that helps them un-silo, transform and route their data across hundreds of nodes with visibility and control.

Another example is Cisco, which uses StreamSets as part of its InterCloud offering. Cisco values StreamSets’ ability to automatically handle infrastructure changes and to provide intelligent monitoring and dynamic shaping of internal operational logs and multi-datacenter ingestion logs.

Four patterns we see in the market are ingestion into Hadoop, ingestion into big data search tools, log data ingestion, and code-free consumption from and production to Kafka.

ADM: How and why did you come up with this solution? Has anything like it been available in the past?

Pancha: My partner, Arvind Prabhakar, and I came up with StreamSets because we felt that legacy technologies were not addressing the pain points big data users were experiencing as they struggled to onboard data. We are just now reaching a maturity level where enterprises are working out how to operationalize Big Data ingest rather than treating it as a series of projects.

This maturity was achieved for traditional data warehouses previously via data integration tools, but those solutions are not fit-for-purpose in a real-time big data world plagued by data drift.

Read more: https://streamsets.com/
